AI is rapidly transforming industries, but its magic relies on a solid foundation: AI infrastructure. This complex ecosystem of hardware, software, and services provides the backbone for developing, training, and deploying AI models. Understanding AI infrastructure is crucial for businesses looking to leverage the power of artificial intelligence effectively and efficiently. This post will explore the key components of AI infrastructure, providing a comprehensive guide for navigating this evolving landscape.

Understanding the Core Components of AI Infrastructure

AI infrastructure isn’t just about fancy computers; it’s a carefully orchestrated combination of resources working in harmony. Let’s break down the essential elements:

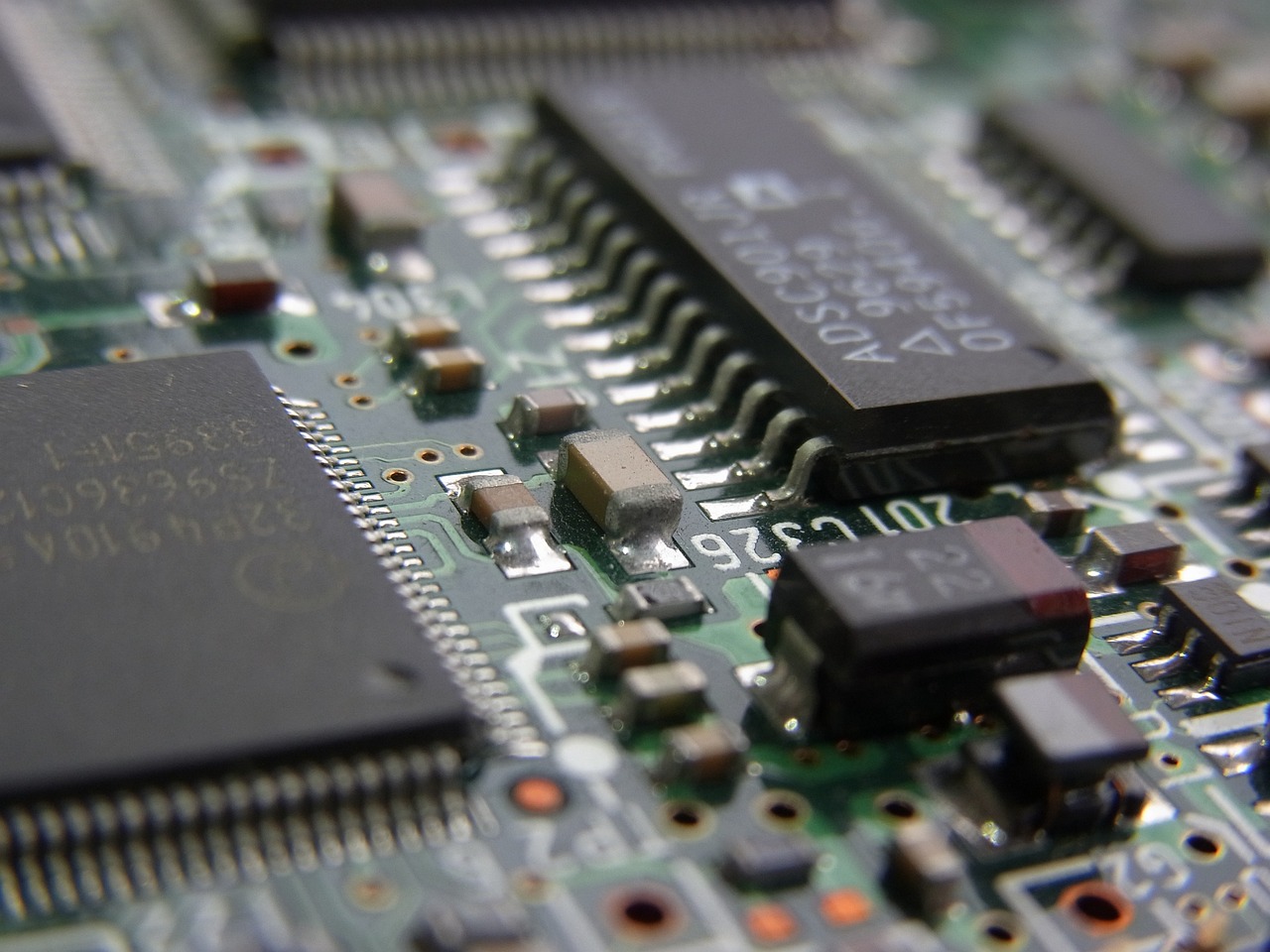

Hardware Infrastructure: Powering the AI Engine

The hardware is the physical backbone of any AI system. The sheer computational power required for AI tasks necessitates specialized hardware components.

- Processing Units:

GPUs (Graphics Processing Units): These are highly parallel processors optimized for matrix operations, making them ideal for deep learning. Companies like NVIDIA and AMD dominate this space. Example: NVIDIA’s A100 GPUs are commonly used in data centers for training large language models.

CPUs (Central Processing Units): While GPUs handle the heavy lifting of AI model training, CPUs manage general-purpose tasks, data preprocessing, and model deployment in some scenarios. Intel and AMD are leading CPU manufacturers.

TPUs (Tensor Processing Units): Developed by Google, TPUs are custom-designed ASICs (Application-Specific Integrated Circuits) built to accelerate tensor operations. They were originally designed for TensorFlow workloads and can now also be used from JAX and PyTorch. They offer significant performance advantages for certain AI models.

- Memory: AI models, especially large ones, require substantial memory capacity.

RAM (Random Access Memory): Large RAM capacity is essential for holding data during training and inference. On the accelerator itself, high-bandwidth memory (HBM) packaged alongside the GPU or TPU is often preferred for demanding AI applications.

- Storage: AI datasets can be massive.

SSD (Solid State Drives): Used for fast access to frequently used data.

HDD (Hard Disk Drives): Used for storing large datasets at a lower cost.

Object Storage: Cloud-based object storage (e.g., AWS S3, Azure Blob Storage) provides scalable and cost-effective storage for massive datasets.

- Networking: High-speed, low-latency networking is crucial for distributing AI workloads across multiple servers or cloud instances.

Ethernet: Gigabit Ethernet is a minimum requirement, with 10GbE or higher preferred.

InfiniBand: A high-performance interconnect technology commonly used in high-performance computing (HPC) and AI clusters.
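To make the memory and networking points above more concrete, here is a rough back-of-envelope sketch. It rests on assumptions that are not from this post: a common rule of thumb of about 16 bytes of model state per parameter for mixed-precision training with an Adam-style optimizer, 2 bytes per parameter for fp16 inference, and a link that sustains 80% of its line rate. The 7-billion-parameter model and the 10 TB dataset are hypothetical.

```python
# Assumed rule of thumb (not from the article): mixed-precision training with
# an Adam-style optimizer keeps roughly 16 bytes of state per parameter
# (fp16 weights and gradients, plus fp32 master weights and two optimizer moments).
BYTES_PER_PARAM_TRAINING = 16
BYTES_PER_PARAM_FP16_INFERENCE = 2


def training_memory_gb(num_params: float) -> float:
    """Approximate model-state memory for training, ignoring activations."""
    return num_params * BYTES_PER_PARAM_TRAINING / 1e9


def inference_memory_gb(num_params: float) -> float:
    """Approximate weight memory for fp16 inference, ignoring runtime caches."""
    return num_params * BYTES_PER_PARAM_FP16_INFERENCE / 1e9


def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to move a dataset over a link at `efficiency` of line rate."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = dataset_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    print(f"training state: {training_memory_gb(params):.0f} GB")
    print(f"fp16 inference: {inference_memory_gb(params):.0f} GB")
    for gbps in (1, 10, 100):  # Gigabit Ethernet, 10GbE, 100 Gb/s class links
        print(f"10 TB over {gbps} Gb/s: {transfer_hours(10, gbps):.1f} h")
```

Under these assumptions, the training state alone exceeds the memory of a single accelerator, and moving 10 TB takes more than a day on Gigabit Ethernet but under three hours on 10GbE. Real numbers depend on activations, batch size, and protocol overhead, so treat the output as a starting estimate only.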

Software Infrastructure: Orchestrating the AI Workflow

Software is the glue that binds the hardware components together and enables AI model development and deployment.

- Operating Systems: Linux distributions like Ubuntu, CentOS, and Red Hat Enterprise Linux are widely used due to their flexibility, open-source nature, and strong support for AI tools.

- Containerization: Docker packages AI applications into containers, and Kubernetes orchestrates those containers across different environments (e.g., development, testing, production). Containerization simplifies deployment, ensures consistency, and improves resource utilization.

- AI Frameworks: These frameworks provide pre-built functions and tools for building and training AI models.

TensorFlow: An open-source framework developed by Google.

PyTorch: An open-source framework originally developed by Facebook (now Meta).

Keras: A high-level API that runs on top of TensorFlow or other backends.

- Data Management Tools:

Data Lakes: Centralized repositories for storing vast amounts of raw data in various formats (e.g., Hadoop, AWS S3, Azure Data Lake Storage).

Data Warehouses: Structured repositories for storing processed and filtered data (e.g., Snowflake, Amazon Redshift).

Databases: Relational (e.g., PostgreSQL, MySQL) and NoSQL (e.g., MongoDB, Cassandra) databases are used for storing and managing structured and unstructured data.

- MLOps Tools: These tools automate and streamline the AI development lifecycle.

Model Training Platforms: Amazon SageMaker, Azure Machine Learning, Google Cloud Vertex AI (the successor to AI Platform).

Model Deployment Tools: Kubeflow, MLflow.

Model Monitoring Tools: Prometheus, Grafana, ELK stack.

Cloud vs. On-Premise AI Infrastructure: Choosing the Right Path

Businesses must decide whether to build their own AI infrastructure on-premise or leverage cloud-based solutions. Each approach has its advantages and disadvantages.

- On-Premise:

Pros: Greater control over data security, compliance, and hardware customization. Potentially lower long-term costs for predictable and consistent workloads.

Cons: High upfront capital expenditure, ongoing maintenance and operational costs, limited scalability.

- Cloud:

Pros: Scalability on demand, pay-as-you-go pricing, access to a wide range of AI services and tools, reduced operational burden.

Cons: Potential security and compliance concerns, vendor lock-in, ongoing costs can be high for demanding workloads.

- Hybrid: Combining on-premise and cloud resources can provide the best of both worlds, allowing businesses to leverage the cloud for burst capacity and experimentation while keeping sensitive data and critical applications on-premise.
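The cost trade-off between the options above can be sketched as a simple break-even calculation. This is a deliberately simplified model: it ignores hardware refresh cycles, discounts, and staffing changes, and every figure in it is a hypothetical placeholder rather than a real price.

```python
from typing import Optional


def break_even_months(capex: float, onprem_monthly_opex: float,
                      cloud_monthly_cost: float) -> Optional[float]:
    """Months until cumulative on-premise cost falls below cumulative cloud cost.

    Returns None when the cloud bill is no higher than on-premise running
    costs, because the upfront investment is then never recovered.
    """
    monthly_saving = cloud_monthly_cost - onprem_monthly_opex
    if monthly_saving <= 0:
        return None
    return capex / monthly_saving


if __name__ == "__main__":
    # Hypothetical placeholder figures, not real vendor prices.
    CAPEX = 250_000.0          # upfront hardware purchase
    ONPREM_OPEX = 4_000.0      # power, cooling, maintenance per month
    CLOUD_HOURLY_RATE = 30.0   # comparable cloud GPU instance per hour

    for label, hours_per_month in (("steady", 600), ("bursty", 100)):
        months = break_even_months(CAPEX, ONPREM_OPEX,
                                   CLOUD_HOURLY_RATE * hours_per_month)
        if months is None:
            print(f"{label}: cloud stays cheaper at this usage level")
        else:
            print(f"{label}: on-premise breaks even after {months:.1f} months")
```

With these placeholder numbers, the steady workload recovers the hardware cost in about a year and a half, while the bursty workload never does. That matches the pattern described above: predictable, consistent workloads favor on-premise, and occasional bursts favor the cloud.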

Building an Effective AI Infrastructure Strategy

Building a successful AI infrastructure requires careful planning and consideration of various factors.

Define Your AI Use Cases and Requirements

Before investing in any infrastructure, clearly define the AI use cases you want to address and their specific requirements.

- Types of AI models: Different models require different types of hardware and software. For example, training large language models requires powerful GPUs and large amounts of memory.

- Data volumes and velocity: Consider the amount of data you’ll be processing and the speed at which it’s generated. This will influence your storage and networking requirements.

- Performance requirements: Define the desired accuracy, latency, and throughput for your AI applications.

- Budget constraints: Balance your performance requirements with your budget.
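One way to turn the data velocity and performance bullets above into numbers is a small sizing sketch. It uses Little's law (requests in flight = throughput × latency) to estimate how many inference replicas a latency and throughput target implies. The function names, the per-replica concurrency, and the 70% utilization target are illustrative assumptions.

```python
import math


def daily_ingest_tb(events_per_second: float, bytes_per_event: float) -> float:
    """Raw data generated per day, in terabytes (1 TB = 1e12 bytes)."""
    return events_per_second * bytes_per_event * 86_400 / 1e12


def replicas_needed(requests_per_second: float, latency_seconds: float,
                    concurrency_per_replica: int,
                    target_utilization: float = 0.7) -> int:
    """Estimate the number of inference replicas using Little's law.

    Requests in flight = throughput * latency. Dividing by what one replica
    can serve concurrently, then by a utilization target, leaves headroom.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    in_flight = requests_per_second * latency_seconds
    return math.ceil(in_flight / concurrency_per_replica / target_utilization)


if __name__ == "__main__":
    # Hypothetical workload: 50,000 events/s of 2 KB each, and an inference
    # service that must handle 500 requests/s at 40 ms per request.
    print(f"ingest: {daily_ingest_tb(50_000, 2_000):.2f} TB/day")
    print(f"replicas: {replicas_needed(500, 0.040, concurrency_per_replica=4)}")
```

Estimates like these are only a first pass. Load testing against the real model is still needed to confirm the per-replica concurrency and latency figures.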

Choose the Right Technologies and Tools

Selecting the right technologies and tools is crucial for building an efficient and scalable AI infrastructure.

- Evaluate different options: Carefully evaluate different hardware, software, and cloud services based on your specific requirements.

- Consider open-source vs. proprietary solutions: Open-source tools typically offer greater flexibility and customization, while proprietary solutions often come with vendor support and tighter integration.

- Prioritize interoperability: Ensure that your chosen technologies can seamlessly integrate with each other.

Implement Robust Security Measures

AI infrastructure handles sensitive data, making security a top priority.

- Data encryption: Encrypt data at rest and in transit.

- Access control: Implement strict access control policies to limit access to sensitive data.

- Vulnerability management: Regularly scan your infrastructure for vulnerabilities and apply patches promptly.

- Compliance: Ensure that your infrastructure complies with relevant regulations (e.g., GDPR, HIPAA).
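As an illustration of the access control point above, here is a minimal sketch of a deny-by-default permission check. The roles, resources, and actions are hypothetical. A production system would normally rely on the identity and access management service of its platform rather than an in-process table like this one.

```python
# Hypothetical role-to-permission table, for illustration only.
# Each permission is an explicit (resource, action) pair.
ROLE_PERMISSIONS = {
    "data_scientist": {("training_data", "read"), ("model", "read"), ("model", "write")},
    "ml_engineer": {("model", "read"), ("model", "deploy")},
    "auditor": {("audit_log", "read")},
}


def is_allowed(role: str, resource: str, action: str) -> bool:
    """Deny by default: only explicitly granted (resource, action) pairs pass."""
    return (resource, action) in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    checks = [
        ("ml_engineer", "model", "deploy"),
        ("ml_engineer", "training_data", "read"),
        ("contractor", "model", "read"),  # unknown role, so access is denied
    ]
    for role, resource, action in checks:
        verdict = "allow" if is_allowed(role, resource, action) else "deny"
        print(f"{role} -> {action} {resource}: {verdict}")
```

The design choice worth noting is the default. An unknown role or an unlisted permission results in a denial, so a gap in the table fails closed rather than open.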

Monitor and Optimize Performance

Continuously monitor the performance of your AI infrastructure and identify areas for optimization.

- Monitoring tools: Use monitoring tools to track resource utilization, performance metrics, and errors.

- Performance tuning: Optimize your hardware and software configurations to improve performance.

- Scalability testing: Regularly test the scalability of your infrastructure to ensure that it can handle increasing workloads.
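A small sketch of what tracking a performance metric can look like in practice: computing latency percentiles from collected samples and flagging a breach of a latency budget. The nearest-rank percentile method, the function names, and the sample values are assumptions for illustration. A real deployment would typically export such metrics to a dedicated monitoring system.

```python
import math
from typing import Dict, Sequence, Union


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def latency_report(samples_ms: Sequence[float],
                   p95_budget_ms: float) -> Dict[str, Union[float, bool]]:
    """Summarize latency samples and flag whether the p95 budget is breached."""
    p95 = percentile(samples_ms, 95)
    return {
        "p50_ms": percentile(samples_ms, 50),
        "p95_ms": p95,
        "breach": p95 > p95_budget_ms,
    }


if __name__ == "__main__":
    # Hypothetical latency samples in milliseconds, with two slow outliers.
    samples = [12, 15, 11, 14, 13, 16, 12, 210, 14, 13,
               15, 12, 11, 13, 14, 12, 16, 15, 13, 190]
    print(latency_report(samples, p95_budget_ms=100))
```

Percentiles are used here instead of the average because a handful of slow requests can hide behind a healthy-looking mean.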

Example: A financial institution planning to use AI for fraud detection would need to consider:

- Use Case: Fraud detection with near real-time response.

- Requirements: High-performance GPUs for training and inference, low-latency networking, and robust security measures to protect sensitive financial data.

- Possible Solution: A hybrid cloud approach with on-premise GPUs for low-latency inference and cloud-based data storage and model training.

Future Trends in AI Infrastructure

The field of AI infrastructure is constantly evolving. Here are some key trends to watch:

- Edge AI: Deploying AI models on edge devices (e.g., smartphones, sensors) to reduce latency and improve privacy.

- Specialized AI Chips: Development of new chips optimized for specific AI tasks, such as natural language processing and computer vision.

- Quantum Computing: Exploring the potential of quantum computers to accelerate AI model training.

- AI-powered Infrastructure Management: Using AI to automate the management and optimization of AI infrastructure.

- Serverless AI: Deploying AI models as serverless functions, eliminating the need to manage servers.

Conclusion

Building and managing AI infrastructure is a complex but crucial undertaking for any organization looking to leverage the power of artificial intelligence. By understanding the core components, choosing the right technologies, and implementing robust security measures, businesses can create a solid foundation for their AI initiatives and unlock significant value. Keeping an eye on future trends will further position them for success in this rapidly evolving field.